Nvidia touts its unified architecture and pretrained libraries for autonomous cars

Nvidia wants carmakers to adopt its single, unified architecture for autonomous vehicles, spanning everything from software to datacenter technology to chips. The company is also making its pretrained deep neural network models for self-driving cars available to its partners.

Santa Clara, California-based Nvidia hopes autonomy will spread across today’s $10 trillion transportation industry. But that transformation will require dramatically more computing power to handle exponential growth in the AI models currently being developed and ensure autonomous vehicles are both functional and safe. At CES 2020, the big tech trade show in Las Vegas this week, we’ll probably see self-driving vehicles that are good but not quite there.

Nvidia’s Drive AV is an end-to-end, software-defined platform for autonomous vehicles. It includes a development flow, datacenter infrastructure, an in-vehicle computer, and pretrained AI models that can be adapted by carmakers.

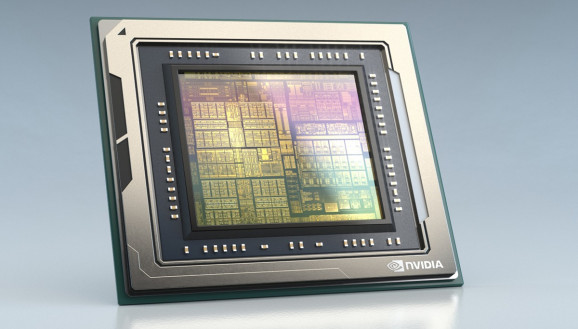

In December, Nvidia unveiled Drive AGX Orin, a massive AI chip with 7 times the performance of its predecessor, Xavier.

In a press briefing, Danny Shapiro, Nvidia’s senior director of automotive, said the company’s graphics chips gave rise to the kind of self-learning design that is now at the heart of artificial intelligence chips for autonomous vehicles.

“Everything in the $10 trillion industry will have some degree of autonomy, and that’s what we’re really working to develop,” Shapiro said. “What’s key to recognize is that it’s a single, unified architecture, enabling us to create these software-defined vehicles, and they get better and better and better over time.”

Samples of Orin will be provided in 2020 for testing, but the chip isn’t expected to ship in vehicles until 2022. It will be capable of 200 trillion operations per second and is designed to handle the large number of applications and deep neural networks (DNNs) that run simultaneously in autonomous vehicles, while meeting systematic safety standards, such as ISO 26262 ASIL-D.

Orin has 17 billion transistors, or basic on-off switches of computing, and is the result of four years of research.

Nvidia is now providing access to its pretrained DNNs and training tools on the NGC container registry. The tools are built around training techniques, such as “active learning,” “transfer learning,” and “federated learning,” that carmakers can use to adapt the models.

“Customers [start] with a pretrained model, then they take their data and retrain using our foundation,” Shapiro said. “And then with these tools they can optimize it, retrain it, test it, and create something that is efficient and customized for them.”

This kind of technology sharing could work well with data privacy policies in places like China or other regions where data isn’t supposed to cross national borders. Carmakers could adopt the pretrained models and retrain them on data collected in their own regions to get improved results for autonomous vehicles. They could then share the retrained model with Nvidia for use across the globe, without sharing the local data, Shapiro said.
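The workflow Shapiro describes, in which each region retrains a shared model on its own data and sends back only the updated model, resembles what is generally called federated averaging. The following is a minimal sketch of that idea, not Nvidia’s implementation: the function names, the toy single-layer “model,” the stand-in gradients, and the sample counts are all hypothetical and chosen only for illustration.

```python
# Hypothetical sketch of federated averaging; none of these names come from Nvidia's tools.
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]


def local_update(global_weights: Weights, local_gradients: Weights,
                 learning_rate: float = 0.1) -> Weights:
    """Simulate one local training step; the raw data stays in the region."""
    return {
        name: [w - learning_rate * g
               for w, g in zip(values, local_gradients[name])]
        for name, values in global_weights.items()
    }


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Combine regional weights, weighting each region by its sample count."""
    total_samples = sum(count for _, count in updates)
    reference = updates[0][0]
    averaged: Weights = {}
    for name, values in reference.items():
        averaged[name] = [
            sum(weights[name][i] * count for weights, count in updates) / total_samples
            for i in range(len(values))
        ]
    return averaged


# Toy example: two regions start from the same pretrained weights.
pretrained: Weights = {"layer": [1.0, 2.0]}
region_a = local_update(pretrained, {"layer": [1.0, 0.0]})  # gradients are stand-ins for local training
region_b = local_update(pretrained, {"layer": [0.0, 1.0]})

# Only the retrained weights and sample counts are shared, never the local data.
merged = federated_average([(region_a, 300), (region_b, 100)])
```

In this sketch the region with more samples pulls the merged weights further toward its own update, which is one common way to weight contributions; a real system would also need secure transport and validation of the shared models.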

Nvidia’s AI ecosystem includes members of Inception, a startup program focused on AI companies. More than 100 Inception members will be on the CES 2020 show floor.

Nvidia Drive customer Mercedes-Benz will also deliver a keynote Monday night on the future of intelligent transportation.

Built as a software-defined platform, Drive AGX Orin is designed to enable architecturally compatible platforms that scale from Level 2 to fully self-driving Level 5 vehicles, enabling carmakers to develop large-scale and complex families of software products.

Since both Orin and Xavier are programmable through open CUDA and TensorRT APIs and libraries, developers can leverage their investments across multiple product generations, Shapiro said.